Today, the industry uses deep learning to train convolutional neural networks (CNNs) in order to make mobile devices more intelligent, which may call for a new approach to processor architecture design. However, clever use of existing technology can also move us toward devices with truly intelligent cognitive capabilities and thoroughly redefine the user experience.

Nvidia made automotive applications and advanced driver assistance systems (ADAS) a focus of its GPU Technology Conference (GTC) in March, and, judging by Elon Musk's comments, one might hope that the challenge of self-driving cars has been almost completely overcome. Meanwhile, as time goes on, and with some adjustments and improvements aimed at reducing power consumption, I see many technologies and applications, such as ubiquitous 3D perception, 3D tracking, and image search, rapidly making their way into smartphones and other embedded systems, whether mains- or battery-powered.

Combined with sensors that detect motion and audio signals, fast memory access, and efficient data processing methods, these systems can have true "cognitive" capabilities and may even, in the near future, give rise to an artificial intelligence platform for mobile devices. At the same time, it is important to optimize existing architectures to implement "smart vision" features such as 3D depth maps and perception, object recognition, and augmented reality, as well as core computational imaging features such as image scaling, HDR, image refocusing, and low-light image enhancement.

Because many image processing and enhancement functions also use computer vision techniques, the distinction between computer vision and image processing is becoming increasingly blurred. The most straightforward example is multi-frame image enhancement, as used in HDR, image scaling, and refocusing: several consecutive images are captured and then blended together to produce a higher-quality picture.

Although we call this "image enhancement", it actually involves a great deal of computer vision processing to "register" the images, that is, to match up two or three frames. Users now take this basic function for granted, but it in fact demands considerable processing power, so demand for specialized, high-performance digital signal processors (DSPs) will increase.
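The register-then-blend idea can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: frames are small grayscale grids stored as lists of lists, the only misalignment is a whole-pixel translation, and registration is a brute-force search over candidate shifts. The helper names (`shift_frame`, `register`, `blend`) are hypothetical, and production pipelines typically rely on more elaborate, sub-pixel or feature-based registration.

```python
def shift_frame(frame, dy, dx, fill=0):
    """Return a copy of `frame` translated by (dy, dx); uncovered pixels get `fill`."""
    h, w = len(frame), len(frame[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = frame[sy][sx]
    return out


def registration_error(ref, frame, dy, dx):
    """Mean absolute difference between `ref` and `frame` shifted by (dy, dx),
    measured only where the two frames overlap."""
    h, w = len(ref), len(ref[0])
    total, count = 0, 0
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                total += abs(ref[y][x] - frame[sy][sx])
                count += 1
    return total / count if count else float("inf")


def register(ref, frame, max_shift=2):
    """Brute-force search for the integer shift that best aligns `frame` to `ref`."""
    candidates = [
        (dy, dx)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda s: registration_error(ref, frame, s[0], s[1]))


def blend(ref, frames, max_shift=2):
    """Align every frame to `ref`, then average all samples available per pixel."""
    h, w = len(ref), len(ref[0])
    total = [[float(v) for v in row] for row in ref]
    count = [[1] * w for _ in range(h)]
    for frame in frames:
        dy, dx = register(ref, frame, max_shift)
        for y in range(h):
            for x in range(w):
                sy, sx = y - dy, x - dx
                if 0 <= sy < h and 0 <= sx < w:
                    total[y][x] += frame[sy][sx]
                    count[y][x] += 1
    return [[total[y][x] / count[y][x] for x in range(w)] for y in range(h)]


# Toy data: a 6x6 reference frame with a small bright patch, and a second
# frame that is the same scene displaced by one pixel down and one pixel left.
reference = [[0] * 6 for _ in range(6)]
reference[2][2], reference[2][3] = 10, 20
reference[3][2], reference[3][3] = 30, 40
second = shift_frame(reference, 1, -1)

estimated_shift = register(reference, second)
blended = blend(reference, [second])
```

Even in this toy form, the registration step dominates the cost: every candidate shift requires a pass over the whole frame, which hints at why the per-pixel workload grows so quickly on real images.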

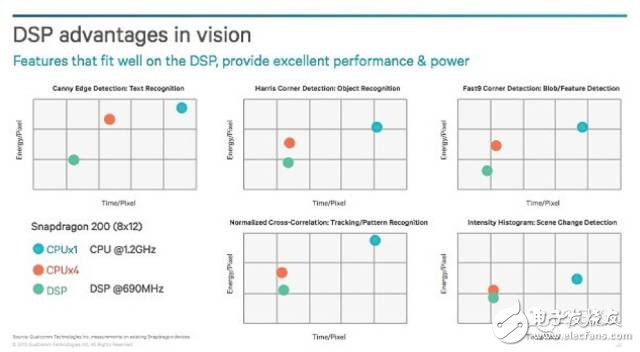

Material Qualcomm presented at Uplinq 2013 best illustrates the relationship between power and processing time per pixel for a variety of processing functions. The figure compares three processor configurations: a single-core CPU running at 1.2 GHz, a quad-core CPU, and a DSP running at 690 MHz.

Figure 1: Power and time required per pixel on different processors, demonstrating the advantage of pairing a DSP with the CPU for visual processing. To optimize power consumption and performance, combining the CPU, DSP, and GPU may be the best overall approach.

The figure shows that the DSP, running at a clock speed only slightly more than half that of the CPU, delivers the same image processing performance, offering a potential performance gain while saving power (power = capacitance × voltage² × frequency, or $P = C V^2 f$).
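To make the power relationship concrete, here is a small worked example in Python. The two clock speeds come from the figure description above; the capacitance and voltage values are placeholders chosen purely for illustration (they are not measurements of any real chip), and the lower DSP voltage in the second case is likewise a hypothetical.

```python
def dynamic_power(capacitance_f, voltage_v, frequency_hz):
    """Dynamic switching power: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


CPU_HZ = 1.2e9   # single-core CPU clock quoted above
DSP_HZ = 690e6   # DSP clock quoted above

# Assumed, illustrative values only.
CAPACITANCE_F = 1e-9
VOLTAGE_V = 1.0

# Case 1: same capacitance and voltage, so only the clock differs.
freq_only_ratio = (
    dynamic_power(CAPACITANCE_F, VOLTAGE_V, DSP_HZ)
    / dynamic_power(CAPACITANCE_F, VOLTAGE_V, CPU_HZ)
)

# Case 2: a lower clock often permits a lower supply voltage; 0.9 V is hypothetical.
lower_voltage_ratio = (
    dynamic_power(CAPACITANCE_F, 0.9, DSP_HZ)
    / dynamic_power(CAPACITANCE_F, VOLTAGE_V, CPU_HZ)
)

print(f"DSP/CPU power, frequency only: {freq_only_ratio:.3f}")
print(f"DSP/CPU power, with lower voltage: {lower_voltage_ratio:.3f}")
```

Under these assumptions the clock difference alone puts the DSP at a little under 60% of the CPU's dynamic power, and because voltage enters the formula squared, any voltage reduction made possible by the lower clock compounds the saving.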

However, as we move toward human-like vision, artificial intelligence, and augmented reality applications on mobile platforms, we may need to rethink the processing architecture we use. Combined with sensor fusion and advanced deep learning algorithms such as CNNs, these highly compute-intensive applications will provide a more context-aware user experience, but at a cost in battery life.

The challenge for designers is to build intelligent, aware devices while still maintaining acceptable battery life. There are several ways to achieve this. For example, a GPU from Qualcomm or Nvidia can be used to support the CPU, an approach already implemented in many smartphones. However, the constant pressure to reduce power consumption is driving us to offload specific processing-intensive functions to DSPs optimized for visual processing. For object recognition and tracking, this approach can cut power consumption by up to a factor of 9 compared with the most advanced GPU clusters available today.

However, even at this level of power consumption, mobile devices are unlikely to be using face recognition to search crowds any time soon, as this feature is still too demanding of processing power. Still, the advent of low-power processors and specially optimized processor architectures gives us hope that real progress is being made in this area. This kind of progress is why MIT Technology Review named deep learning one of its ten breakthrough technologies of 2013. In addition to the demonstrations at GTC, Microsoft, Baidu, and Cognivue have also shown research results. Since then, there have been other, longer-term developments in this field.

In addition, Aziana (Australia) recently announced a merger with BrainChip (California), a company that specializes in implementing artificial intelligence in hardware and has focused on developing it for mobile platforms. While architectures that combine high processing power with ultra-low power consumption are critical, as cloud connectivity becomes faster and more pervasive, it makes sense to shift as much of the processing overhead as possible to the cloud. The trend will be toward intelligent allocation of processing: do in the cloud the work best suited to the cloud, do on the mobile device the work best suited to the device, and distribute functions across the architecture as efficiently as possible, for example by having the CPU divide the load between the GPU and the DSP. In Qualcomm's words, it is about using the right engine for the right job.
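One way to picture "the right engine for the right job" is a scheduler that, for each task, picks the engine with the lowest on-device energy cost among those that can meet the task's deadline. The Python sketch below is a hypothetical illustration only: the `Engine` type, the `pick_engine` helper, and every latency and energy figure are invented for the example and do not describe any vendor's actual scheduler or hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Engine:
    name: str
    latency_ms: float  # assumed time for this engine to finish the task
    energy_mj: float   # assumed on-device energy spent on the task


def pick_engine(engines, latency_budget_ms, cloud_available=True):
    """Choose the lowest-energy engine that meets the latency budget.

    The engine named "cloud" is skipped when there is no connectivity.
    """
    eligible = [
        e for e in engines
        if e.latency_ms <= latency_budget_ms
        and (cloud_available or e.name != "cloud")
    ]
    if not eligible:
        raise ValueError("no engine can meet the latency budget")
    return min(eligible, key=lambda e: e.energy_mj)


# Made-up cost estimates for a single vision task (illustrative only).
estimates = [
    Engine("cpu", latency_ms=40.0, energy_mj=80.0),
    Engine("gpu", latency_ms=15.0, energy_mj=30.0),
    Engine("dsp", latency_ms=20.0, energy_mj=6.0),
    Engine("cloud", latency_ms=120.0, energy_mj=2.0),  # radio energy only
]

realtime_choice = pick_engine(estimates, latency_budget_ms=33.0)
background_choice = pick_engine(estimates, latency_budget_ms=500.0)
offline_choice = pick_engine(estimates, latency_budget_ms=500.0, cloud_available=False)
```

With these invented numbers, a frame-rate-bound task lands on the DSP, a latency-tolerant task goes to the cloud, and the same task falls back to the DSP when the device is offline. A real allocator would also have to weigh data transfer cost, privacy, and thermal limits, which this sketch ignores.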
