Despite some opinion to the contrary, 5G is no longer just a research topic or an industry-forum talking point for the major telecommunications companies. The reality is that major OEMs will deploy 5G systems in the next few years, which means that development is already well under way. For example, Ericsson has partnered with NTT DOCOMO to launch 5G services in Japan in time to support the 2020 Tokyo Olympics. Ericsson also plans to showcase its 5G capabilities during the 2018 Winter Olympics in South Korea, this time in cooperation with South Korea's SK Telecom.

Data services based on the upcoming 5G network technology will allow faster access to far more online data. This immediacy of information will let many of today's advanced applications, such as autonomous vehicles and virtual or augmented reality systems, store less data locally and rely on the cloud instead.

For this to work, network latency needs to be below 1 ms. That requires not only 5G infrastructure in the data center, but also a data center located close to its users and to the cellular radio towers it serves: a data center more than 250 miles away is out of reach. Although the data still resides upstream, it needs to be immediately accessible at the edge of the network. This potentially reverses the trend of siting data centers near power plants that can meet their significant energy needs, or in climates where cooling requirements, and therefore additional energy demand, are low.
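The distance limit can be sanity-checked with a back-of-the-envelope propagation calculation. The sketch below is illustrative only: it considers one-way propagation delay and ignores switching, queuing and processing time. The fiber refractive index of 1.47 is an assumed typical value, not a figure from the article, and the function names are hypothetical.

```python
# Speed of light in vacuum (exact, by definition of the metre).
C_VACUUM_KM_PER_S = 299_792.458
KM_PER_MILE = 1.609344

# Assumption: typical refractive index of silica fiber (not from the article).
FIBER_REFRACTIVE_INDEX = 1.47


def one_way_delay_ms(distance_miles: float, refractive_index: float = 1.0) -> float:
    """Propagation delay in milliseconds over a straight path of the given length."""
    distance_km = distance_miles * KM_PER_MILE
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1000.0


def max_distance_miles(budget_ms: float, refractive_index: float = 1.0) -> float:
    """Longest one-way path whose propagation delay alone fits in the budget."""
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return budget_ms / 1000.0 * speed_km_per_s / KM_PER_MILE


if __name__ == "__main__":
    print(f"250 miles in vacuum: {one_way_delay_ms(250):.2f} ms")
    print(f"250 miles in fiber:  {one_way_delay_ms(250, FIBER_REFRACTIVE_INDEX):.2f} ms")
    print(f"1 ms budget, vacuum: {max_distance_miles(1.0):.0f} miles")
    print(f"1 ms budget, fiber:  {max_distance_miles(1.0, FIBER_REFRACTIVE_INDEX):.0f} miles")
```

Under these assumptions, a 250-mile path takes roughly 1.3 ms one way even at the vacuum speed of light and about 2 ms in fiber, so propagation alone already exceeds a 1 ms budget.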

Part of the solution is the recent growth of micro data centers, each of which has a small capacity but which exist in sufficient numbers to support this more fragmented cloud infrastructure. Even so, utility power supplies may still be stretched, which makes it all the more important that all the power capacity available in the data center is used effectively. This may be less of a problem for micro data centers on the outskirts of major cities, where electricity is plentiful, but it is a problem in remote rural areas where infrastructure is still underdeveloped. Such sites cannot afford to have capacity locked away simply because it was provisioned to meet peak demand or to provide redundancy for mission-critical activities.

That is why another part of the solution comes from deploying software-defined power (SDP), a combination of hardware and software that can intelligently and dynamically allocate power across the entire data center. Before delving into the solution, however, let's take a closer look at the problem. Essentially, three aspects of power distribution and management in traditional data centers lead to capacity being over-provisioned and underutilized.

First, in a Tier 3 or Tier 4 data center, 100% redundancy is required for mission-critical work. This means that every component in the power path, from the utility supply and standby generators through the uninterruptible power supplies (UPS) and power distribution units (PDUs) to the server racks and the servers themselves, is duplicated. This is usually the case even though not all servers need a backup (because they do not run mission-critical work). So if half of a data center's workload is non-critical, half of the redundant power capacity is not actually required. Put another way, a remarkable one-quarter of the data center's total power capacity sits idle, allocated as backup to servers that do not need it, even though it is in theory available.
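The one-quarter figure follows from simple arithmetic, sketched below. The function name is hypothetical, and the calculation assumes full duplication of the power path (so the backup half is 50% of installed capacity) and that backup capacity is split in proportion to workload.

```python
def stranded_redundant_fraction(non_critical_share: float) -> float:
    """Fraction of total power capacity held as backup for non-critical load.

    Assumes 100% redundancy: installed capacity is twice the load, so the
    redundant half is 50% of the total, split in proportion to workload.
    """
    if not 0.0 <= non_critical_share <= 1.0:
        raise ValueError("non_critical_share must be between 0 and 1")
    redundant_share_of_total = 0.5  # backup path duplicates the primary path
    return redundant_share_of_total * non_critical_share


if __name__ == "__main__":
    # Half of the workload is non-critical, as in the example in the text.
    print(stranded_redundant_fraction(0.5))  # 0.25
```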

Then there are two situations in which power must be provisioned to cope with peak loads. The first is determined by CPU utilization and the type of task being performed, since some tasks are inevitably more processing-intensive than others. For example, Google has shown that the ratio of average to peak power for servers handling webmail is 89.9%, whereas for web search it is lower, at 72.7%. Therefore, if power is provisioned for every server on the basis of webmail processing, servers used only for web search will have at least 17% of their power capacity left unused.

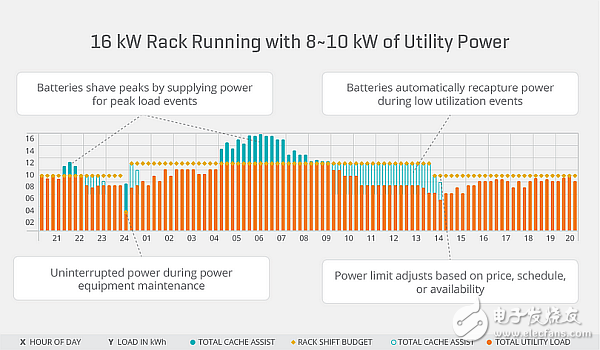
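The 17% figure is the gap between the two ratios quoted above. A minimal sketch of that comparison follows; the dictionary and function names are hypothetical, and it assumes both ratios are expressed against the same provisioned peak.

```python
# Average-to-peak power ratios quoted in the text, in percent of peak.
AVG_TO_PEAK_PERCENT = {"webmail": 89.9, "web_search": 72.7}


def unused_headroom_points(workload: str, provisioned_for: str) -> float:
    """Percentage points of capacity left unused when a server running
    `workload` is provisioned as if it were running `provisioned_for`."""
    return AVG_TO_PEAK_PERCENT[provisioned_for] - AVG_TO_PEAK_PERCENT[workload]


if __name__ == "__main__":
    gap = unused_headroom_points("web_search", provisioned_for="webmail")
    print(f"Unused headroom: {gap:.1f} percentage points")
```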

The second situation is load that changes over time. This can be either a pattern that repeats throughout the day or a highly dynamic variation caused by the tasks being performed. For example, a server rack may typically draw 8-10 kW, but if its peak demand reaches 16 kW, then 16 kW of supply must be provisioned.

As mentioned earlier, SDP provides a solution for power management in all data centers, both the traditional ones serving cloud computing and storage needs and the more flexible micro data centers required for low-latency 5G applications. SDP supports everything from optimizing the voltages of the distributed power architecture within the server rack to dynamic power sourcing and peak shaving. Peak shaving addresses dynamic load changes whose peaks can be far higher than the nominal demand level. Storing energy in batteries during periods of low utilization allows the system to respond instantly to surges in demand, which avoids over-engineering the power supply capability, as shown in the figure.

Figure: Energy is stored in the battery during periods of low power utilization so that power surges can be met instantly on demand, avoiding over-engineering of the power supply capability.
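The peak-shaving idea can be illustrated with a toy simulation. This is a minimal sketch rather than a description of any vendor's implementation: the `Battery` class, the `peak_shave` function, the hourly load profile and the 6 kWh battery size are all hypothetical, and the model assumes a lossless battery with no charge or discharge rate limits.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_kwh: float
    charge_kwh: float = 0.0


def peak_shave(load_kw, supply_limit_kw, battery, step_hours=1.0):
    """Return the power drawn from the supply at each time step.

    Demand above supply_limit_kw is served from the battery; spare supply
    below the limit recharges it. Raises if the battery cannot cover a peak.
    """
    drawn = []
    for demand in load_kw:
        if demand > supply_limit_kw:
            # Discharge the battery to cover the part of the peak above the limit.
            needed_kwh = (demand - supply_limit_kw) * step_hours
            if needed_kwh > battery.charge_kwh + 1e-9:
                raise RuntimeError("battery exhausted during peak")
            battery.charge_kwh -= needed_kwh
            drawn.append(supply_limit_kw)
        else:
            # Use spare supply capacity to recharge, up to the battery's capacity.
            spare_kwh = (supply_limit_kw - demand) * step_hours
            stored = min(spare_kwh, battery.capacity_kwh - battery.charge_kwh)
            battery.charge_kwh += stored
            drawn.append(demand + stored / step_hours)
    return drawn


if __name__ == "__main__":
    # Hypothetical hourly rack load in kW: nominal 8-10 kW with one 16 kW peak.
    load = [8, 8, 8, 16, 10, 8]
    battery = Battery(capacity_kwh=6.0)
    drawn = peak_shave(load, supply_limit_kw=10.0, battery=battery)
    print("peak load:", max(load), "kW; peak supply draw:", max(drawn), "kW")
```

In this profile the supply never delivers more than 10 kW even though the rack briefly needs 16 kW, which is the sense in which stored energy avoids provisioning the supply for the peak.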

Virtual Power Systems (VPS) and CUI have partnered to provide one such solution, Intelligent Control of Energy (ICE). This complete power management capability can be deployed in both existing and new data center installations. It comprises power switching and Li-ion battery storage modules from CUI, together with an operating system from VPS. ICE can reduce the total cost of ownership of server power installations by up to 50% by freeing redundant power capacity from non-mission-critical systems, managing load distribution and maximizing utilization.
